This post continues the exploration of predicate logic, highlighting its expressiveness compared to propositional logic, discussing its limitations, and outlining applications in various fields.

Advanced Expressiveness Compared to Propositional Logic

Propositional logic treats whole sentences as indivisible. Predicate logic can talk about individuals and relations between them, and it can express generality and existence. Here are some patterns that propositional logic cannot capture.

- Universal and existential statements

Everyone has a parent:

\(\forall x\; \exists y\; Parent(y, x)\).

Propositional logic would need one separate atom for each individual; it has no quantifiers. - Sameness across occurrences (uniqueness)

There is exactly one king:

\(\exists x\; ( King(x) \wedge \forall y\; ( King(y) \to y = x )) \).

The second part ensures that anything else called a king must be that same (x). - Describing how a binary relation behaves

With a binary predicate symbol \(\le\), the following package of sentences makes \(\le\) behave like “less‑or‑equal” on numbers:

\(\forall x\; (x \le x)\)

\(\forall x\;\forall\; y\forall\; z (x \le y \wedge y \le z \to x \le z)\)

\(\forall x\;\forall\; y (x \le y \wedge y \le x \to x = y)\)

\(\forall x\;\forall\; y (x \le y \vee y \le x)\)

Propositional logic cannot impose such structural constraints on a relation. - Talking about functions using the language itself

With a unary function symbol \(f\), we can express properties like:

- Different inputs give different outputs:

\(\forall\; x\forall\; y ( f(x) = f(y) \to x = y )\). - Some element is never hit by \(f\):

(\exists y\; \forall\; x ( f(x) \neq y )\).

- Quantifier order matters

\(\exists x\; \forall\; y Likes(y, x)\) vs \(\forall\; x \exists y\; Likes(x, y)\)

These say genuinely different things.

Takeaway: Quantifying over individuals and naming relations or functions lets us axiomatize rich structures inside the language itself. This is the key gain over propositional logic.

Limitations of Predicate Logic

The language is intentionally restrained. Here are natural‑sounding properties that cannot be captured by a single sentence.

- Forcing the domain to be finite or infinite with one sentence

It is possible to write sentences that rule out domains of size 1, then size 2, and so on. For example

\(\forall x\; \forall y\; x=y\) implies there is only a single element in the domain.

\(\forall x\; \forall y\; \forall z\; x \ne y \Rightarrow (z=x \vee z=y)\)implies there is are at most two elements in the domain.

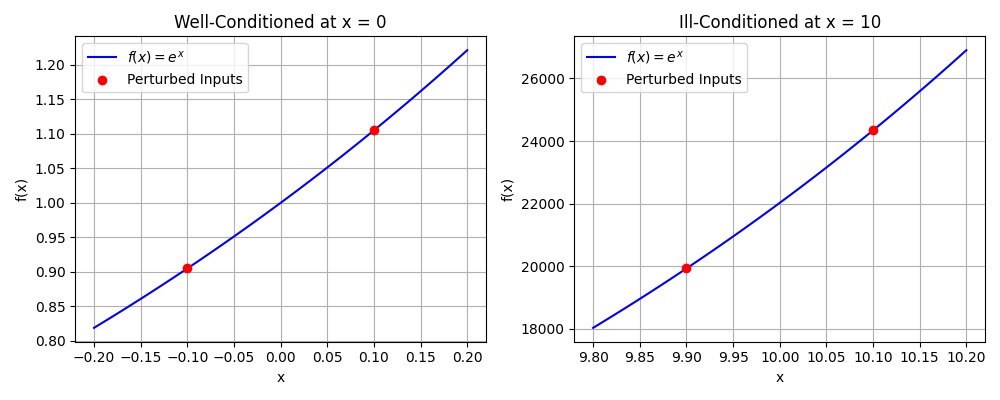

But there is no single sentence that says “the domain is finite” or “the domain is infinite.” Intuitively, a sentence has finite length; it cannot rule out all larger and larger finite cases at once. - Unbounded reachability — some path of any length

With a binary predicate \(E(x,y)\) (read: there is an edge from x to y), one can express “there is a path from a to b of length at most 3,” or length at most 4, and so on—each by a separate sentence. What cannot be done with one sentence is: “there is a path of some length, however large.” The language can speak about any fixed length, but not “for any length, whichever turns out to be needed,” in a single shot. - Capturing exactly one intended infinite structure with a single theory

When familiar infinite objects (such as the natural numbers) are axiomatized using only this language, there will be other, unintended models that satisfy the same sentences. This practical limitation shapes how axiomatizations are designed and used.

Applications in Mathematics and Computer Science

Some uses rely directly on the language described so far; others point ahead to later topics.

- Foundations of mathematics: Taking one binary predicate symbol \(\in\) (read: “is an element of”), sets can be axiomatized step by step, and familiar mathematics can be rebuilt within the same logical framework.

- Databases: Many common queries are expressible with \(\forall\) and \(\exists\) over relations. For example:

- Every course has some enrolled student:

\(\forall c\; ( Course(c) \to \exists x Enrolled(x,c) )\). - A student attends all courses taught by \(p\):

\(\forall c\; ( Teaches(p,c) \to Takes(x,c) )\).

Integrity constraints like uniqueness of IDs are likewise sentences of the language.

- Every course has some enrolled student:

- Reasoning about programs: Program properties are naturally stated as logical conditions: for all inputs meeting \(P\), the output meets \(Q\). Tools can help discharge these obligations by deriving the required sentences from program descriptions.

- Automated and interactive proving: Automated provers and model finders work directly with sentences in this language, searching for derivations or counterexamples.

Summary

- Predicate logic goes beyond propositional logic by quantifying over individuals and naming relations or functions, enabling patterns such as uniqueness, relational behavior, and quantified statements.

- Certain global properties do not fit into a single sentence (finite vs. infinite size, unbounded reachability, and isolating a single intended infinite structure).

- Despite those boundaries, the language underpins formalization across mathematics, databases, program specification, and automated reasoning.

Exercises

- Uniqueness pattern: Using predicates \(Student(x)\),\( Course(c)\), and \(Enrolled(x,c)\), formalize: Exactly one student is enrolled in every course.

- Function behavior: With a unary function symbol \(f\), write sentences stating (a) different inputs give different outputs; (b) every element is hit by f; (c) combine (a) and (b).

- Quantifier order: Formalize both: (i) There is someone whom everyone admires, and (ii) Everyone admires someone, using a binary predicate \(Adm(x,y)\). Explain the difference in plain words.

- Paths of fixed length: With a binary predicate \(E(x,y)\), write a sentence saying there is a path from a to b of length at most 3. (Hint: existentially quantify intermediate nodes.)

- Database‑style constraint: Using \(Teaches(p,c)\) and \(Takes(x,c)\), express: For each student \(x\), there is some course \(c\) that \(x\) takes only if \(p\) does not teach \(c\).